Data-driven SEO is just the starting point

Although there has been much talk within the industry about data-driven SEO, there is still very little emphasis on mathematics and on getting results that are statistically significant. In this section we’ll look briefly at some of the challenges faced by the SEO industry today.

Statistical rigour is too often missing from SEO technical audits

While much SEO expertise is based on published best practices from sources such as Google Webmaster Tools, the way SEO consultancies operate is still too anecdotal and lacks statistical rigour.

For example, it’s quite normal for an SEO agency to audit a website once a month using a single tool or a handful of data sources. Based on this, the agency delivers a set of recommendations, often without reference to competitors. However, relying on a single data sample risks producing results that are biased and possibly ineffective. A once-a-month audit may catch the client’s site on a particularly good or bad day, which undermines the validity of the audit.

Given that an effective technical audit can cover many variables, such as mobile page speed and content reading age, a more statistical approach would be to design a data experiment for each variable. This means gathering data on each variable and understanding how often it is likely to change. That, in turn, determines how the sampling should be done and how often the data needs to be collected.

So while mobile page speed should be measured at random times of day, every day, the reading age of a page’s content only needs to be resampled as often as the website is updated.
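As a minimal sketch of what such a sampling plan could look like, the Python below draws one random timestamp per day for mobile page speed checks, and only flags a page for a fresh reading-age measurement when it has been modified since it was last sampled. The function names `pagespeed_sample_times` and `needs_reading_age_resample`, and the one-sample-per-day schedule, are illustrative assumptions rather than a description of any particular tool.

```python
import random
from datetime import date, datetime, time, timedelta
from typing import Optional


def pagespeed_sample_times(start: date, days: int, seed: Optional[int] = None) -> list[datetime]:
    """Return one uniformly random timestamp within each of `days` consecutive days."""
    rng = random.Random(seed)
    schedule = []
    for offset in range(days):
        midnight = datetime.combine(start + timedelta(days=offset), time.min)
        # Random second of the day, so tests are not always run at the same hour.
        schedule.append(midnight + timedelta(seconds=rng.randrange(24 * 60 * 60)))
    return schedule


def needs_reading_age_resample(last_sampled: datetime, last_modified: datetime) -> bool:
    """Reading age only changes when the copy changes, so resample after an update."""
    return last_modified > last_sampled


for ts in pagespeed_sample_times(date(2024, 1, 1), days=7, seed=42):
    print("Run mobile page speed test at", ts.isoformat(timespec="minutes"))
```

Randomising the time of day spreads measurements across busy and quiet periods, so no single "good or bad day" or hour dominates the sample.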

SEO best practice is too generalised

Much current SEO best practice is too generalised and fails to take into account the intricacies of how content sits within websites. For example, one of the best-known SEO practices is to keep title tags (<title>) to a maximum of 60 characters. However, this generic advice takes no account of:

- The type of content: a long title may be acceptable for a blog post but not for a product or service page. Any SEO analysis therefore needs to segment pages by content type and test mathematically for the optimal title length within each segment.

- The industry: long titles may be the norm in some industries, in which case there may be no statistical evidence that the recommendation is worthwhile, or even valid.

SEO best practice also rarely takes into account the reading age of content, a metric used by journalists and professional writers. This is surprising, given that the SEO industry is keen to produce great content in response to Google emphasising its importance in determining search rankings. Nor is it an isolated example: many metrics are overlooked when content is evaluated from an SEO perspective.
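One common way to estimate reading age is the Flesch-Kincaid grade level, 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) - 15.59, which gives a US school grade; adding five years gives a rough reading age. The sketch below assumes that formula and uses a crude vowel-group heuristic to count syllables, so treat its output as an approximation rather than a precise measurement.

```python
import re


def count_syllables(word: str) -> int:
    """Crude heuristic: count vowel groups, dropping a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level (US school grade)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


def reading_age(text: str) -> float:
    """Rough reading age in years: grade level plus five (an approximation)."""
    return flesch_kincaid_grade(text) + 5


simple = "The cat sat on the mat. It was warm."
dense = "Comprehensive statistical methodologies substantially improve organisational decision-making processes."
print(round(reading_age(simple), 1), round(reading_age(dense), 1))
```

Run across every page in a segment, a score like this can sit alongside title length and other metrics in the same statistical tests.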

The SEO industry needs to be more unified and dynamic

Most SEO practitioners cannot agree on what comprises best practice. A [survey carried out by Moz](http://moz.com/search-ranking-factors) asked leading industry experts which ranking factors were influential in SEO. The results listed over 80 ranking factors, of which the highest correlation was 0.39. This means that SEO practitioners could only agree in 1.3% of instances about which factors were influential for SEO.

While one agency will justify their SEO audit results using one set of criteria, another agency will disagree and promote their approach as more accurate and trustworthy. How can a client possibly know who to trust?

The fundamental problem here is one of measurement. As computer scientist Grace Hopper said: “One accurate measurement is worth a thousand expert opinions”. If SEO agencies made more use of mathematics (so they could really understand what the search engines are doing) and less use of opinion and statistically dubious best practices, we would see a much clearer picture of which SEO techniques, ranking factors and approaches deliver the best results for clients.

Google uses advanced mathematics – your SEO agency should too

One of the problems for the SEO industry at the moment is that much of its success (and failure) depends on how well it keeps up with the search engines. However, given the size of search engines like Google, and the sophistication of the technology they pioneer, keeping up is proving challenging.


How Google works

Google collects data on a trillion web pages, some of them daily, to build a dataset that helps the search engine decide which pages to rank in response to a search query.

To analyse that data, Google reports using at least 200 signals to evaluate various aspects of a web page, such as its content, site architecture and popularity metrics.

These 200 signals are shared across a number of algorithms:

- Hummingbird

- PageRank

- Panda

- Penguin

- and others

Each algorithm uses mathematical techniques to achieve a particular purpose. For example, according to its patent application, the Panda algorithm uses a technique known as a support vector machine (SVM) to classify content as duplicate or unique.

Searching out duplicate content online helps Google clean up its data set so that the true ranking worth of website content can be accurately calculated. This not only means weeding out plagiarised content but also penalising the manipulative attempts by sections of the SEO industry to fake popularity.

In particular, Google’s implementation of SVM is likely to work by first training on an initial set of web pages that humans have classified; this initial set is known as the training dataset. The algorithm then uses maths to find the boundary that best separates content classified as duplicate from content classified as unique, and uses that boundary to classify new, unlabelled pages. Google will have chosen SVM over other techniques, such as k-means clustering (an unsupervised method that groups data without using human labels), by weighing up predictive accuracy, scale and other factors.
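To make the train-then-predict workflow concrete, here is a toy linear SVM trained in plain Python with the Pegasos stochastic sub-gradient method on a small hand-labelled set. It is not Google’s implementation. The two features are hypothetical: `shingle_overlap` (the share of word sequences a page shares with its most similar page) and `template_share` (the share of its text that is boilerplate), and all values are made up for illustration.

```python
# Hypothetical labelled training set: [shingle_overlap, template_share]
# +1 = duplicate, -1 = unique (labels assigned by a human reviewer)
X_train = [
    [0.90, 0.80], [0.10, 0.20], [0.85, 0.70], [0.20, 0.10],
    [0.95, 0.90], [0.15, 0.30], [0.80, 0.75], [0.05, 0.15],
]
y_train = [1, -1, 1, -1, 1, -1, 1, -1]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def augment(x):
    """Append a constant 1.0 so the final weight acts as the bias term."""
    return list(x) + [1.0]


def train_linear_svm(X, y, lam=0.01, epochs=500):
    """Minimise lam/2 * ||w||^2 + mean hinge loss with Pegasos-style updates."""
    w = [0.0] * (len(X[0]) + 1)
    t = 0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            t += 1
            eta = 1.0 / (lam * t)                    # decreasing step size
            xa = augment(xi)
            violates_margin = yi * dot(w, xa) < 1    # checked before the update
            w = [(1 - eta * lam) * wj for wj in w]   # step for the L2 regulariser
            if violates_margin:                      # step for the hinge loss
                w = [wj + eta * yi * xj for wj, xj in zip(w, xa)]
    return w


def predict(w, x):
    """Return +1 (duplicate) or -1 (unique) for a page's feature vector."""
    return 1 if dot(w, augment(x)) >= 0 else -1


weights = train_linear_svm(X_train, y_train)
print(predict(weights, [0.88, 0.82]))  # an unseen page resembling the duplicates
print(predict(weights, [0.12, 0.18]))  # an unseen page resembling the unique pages
```

Unlike k-means, which would group pages without looking at the labels, the SVM uses the human labels directly to place its boundary.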

Once the analysis is complete, the algorithms feed into Google’s overall ranking system, helping it estimate how likely a piece of content is to satisfy a searcher’s query.

Each algorithm is updated either continuously or periodically as the internet changes, driven by user preferences, the evolution of manipulative SEO spam, and the appearance of technology that enhances the user experience of websites.

How a maths-driven SEO agency can keep up with Google

We founded maths-driven digital agency Artios because we want to make online marketing, including SEO, more predictable and quantifiable. Here are some examples of what we do:


Practice SEO like a search engine

Instead of approaching tagging in the usual way, by encouraging copywriters to insert keywords in the title tag, the body copy, the URL and so on, we try to approach tagging from a search engine’s perspective.

Search engines can automatically tag your HTML, text or other web-based content. They use sophisticated natural language processing (NLP) technology to analyse your content and tag it in much the same way a human would. NLP technology is capable of abstraction: it understands how concepts relate and tags accordingly.


By deploying NLP to analyse content, we can therefore assess two things accurately: the extent to which the body content is optimised for the target keyword, and how distinct each page is from the site’s other pages, so that pages do not cannibalise each other’s rankings.
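Search engines’ own NLP is far more sophisticated than anything shown here, but a simple stand-in makes the two checks concrete. The sketch below swaps in TF-IDF weighting and cosine similarity, a classic information-retrieval technique and not the algorithm described above, to score how closely each page’s copy aligns with a target keyword and to flag pairs of pages similar enough to risk cannibalisation. The URLs, copy and the 0.5 threshold are hypothetical.

```python
import math
import re
from collections import Counter
from itertools import combinations


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs):
    """Return one {term: weight} dict per document (term frequency x smoothed IDF)."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    return [
        {
            term: (count / len(tokens)) * (math.log((1 + n) / (1 + df[term])) + 1)
            for term, count in Counter(tokens).items()
        }
        for tokens in tokenized
    ]


def cosine(a, b):
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def audit(pages, keyword, threshold=0.5):
    """Score keyword alignment per page and flag near-duplicate page pairs."""
    urls = list(pages)
    vectors = tfidf_vectors([keyword] + [pages[u] for u in urls])
    keyword_vec, page_vecs = vectors[0], dict(zip(urls, vectors[1:]))
    alignment = {u: cosine(keyword_vec, page_vecs[u]) for u in urls}
    overlaps = [
        (a, b) for a, b in combinations(urls, 2)
        if cosine(page_vecs[a], page_vecs[b]) >= threshold
    ]
    return alignment, overlaps


# Hypothetical pages on one site
pages = {
    "/credit-cards": "Compare credit cards, apply online for a credit card with a low APR.",
    "/loans": "Personal loans with fixed repayments. Apply online for a personal loan.",
    "/credit-card-offers": "Credit card offers: compare credit cards and apply for a credit card.",
}
alignment, overlaps = audit(pages, "credit card")
print(alignment)
print(overlaps)
```

Bag-of-words similarity only sees shared terms; it cannot capture the deeper semantic relationships search engines model, which is why it is a sketch rather than a substitute.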

Drilling down for the detail

Using our algorithms to analyse two pages ranking for credit cards, we found that search engines were 97.5% likely to associate one credit card provider’s page with the search phrase “Credit Card”. In contrast, the competitor’s page had a zero probability of being associated with this term, despite mentioning credit cards and optimising its title tag as:

Title: Personal Credit Cards | Apply Online | MBNA

This example shows that making your content searchable requires much more subtlety than simply inserting keywords and tagging. Current search engine technology looks deeper into the inter-relationships and meanings of the words used in the copy. So your content needs a close semantic relationship with the keywords normally found in your title tag to stand a chance of ranking for them.

Best practice advice alone is not enough to deliver SEO rankings: following it left the credit card competitor failing to rank well for its desired search term. This is not a good result if they are your client! SEOs need to make use of NLP technology and data science to measure how well their client’s content aligns with search engines, in order to ensure the best outcome for the client.


Identifying the game changers

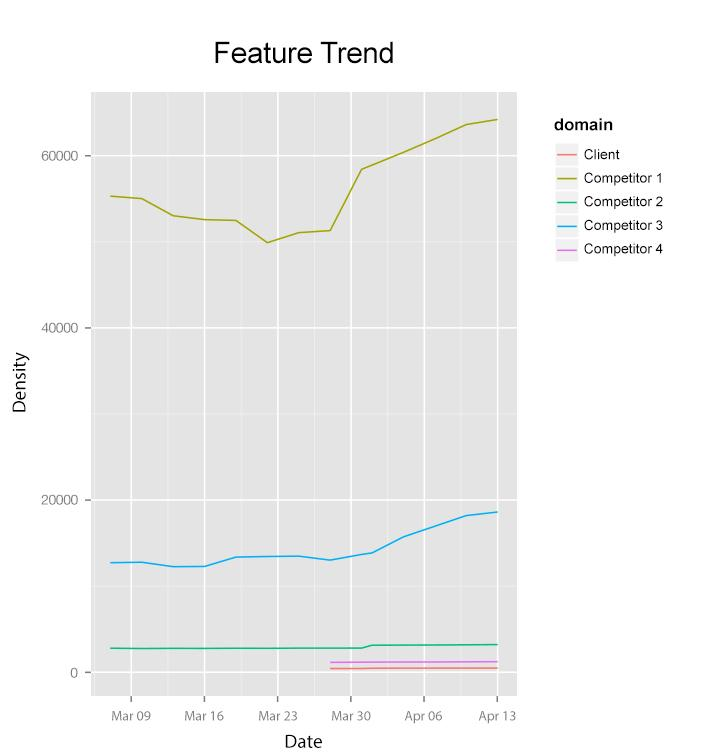

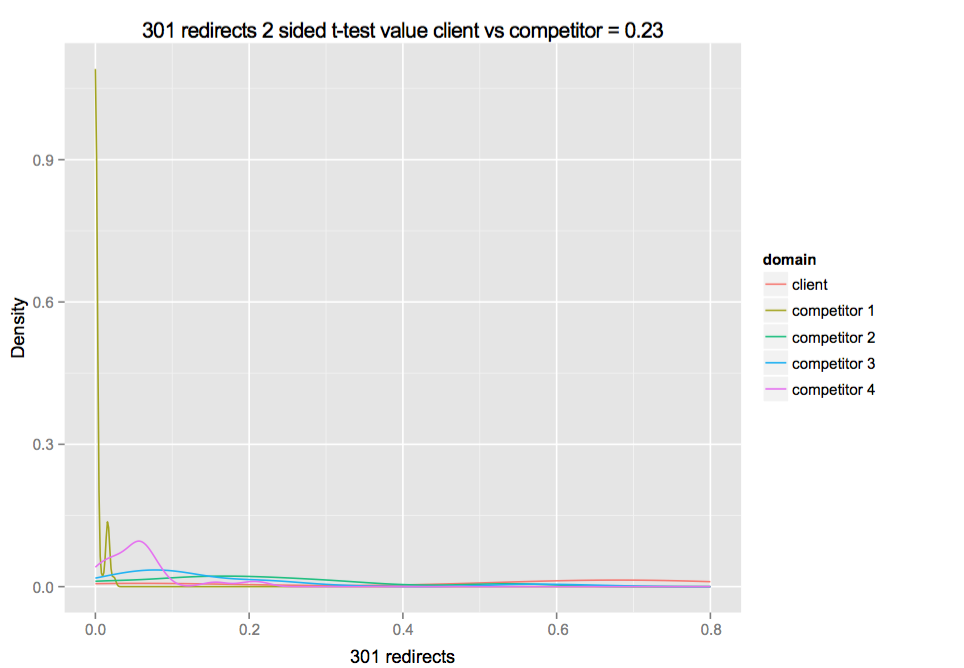

With so many ranking factors, it’s important to identify which specific factors make a difference to your traffic, and this involves selecting the right methodology.

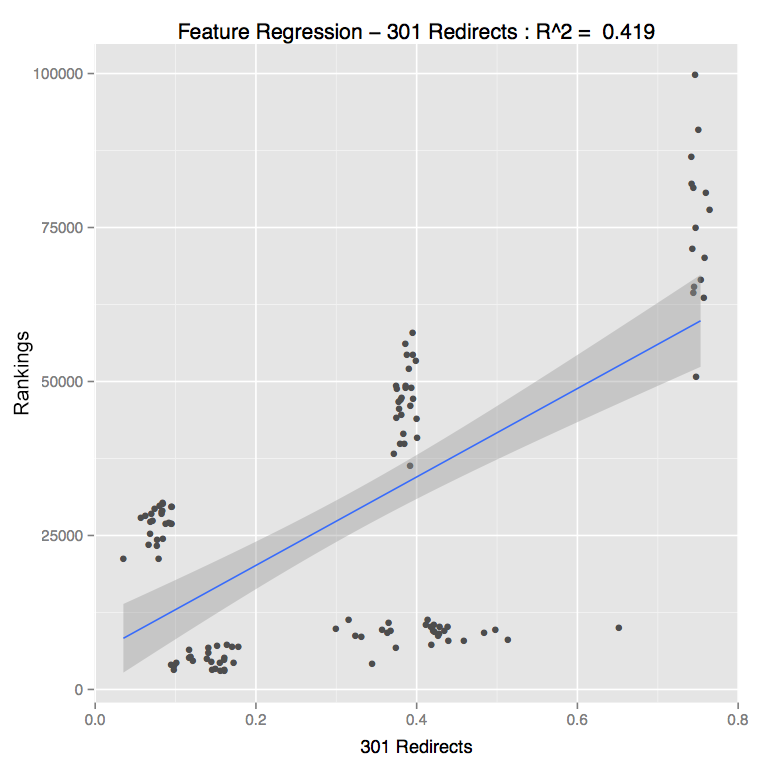

According to the graph above, the difference between the site tested and its competitors in terms of 301 redirects is not statistically significant at the 95% confidence level. On this evidence alone, there is no reason to suspect that the client’s site suffers from 301 redirect issues. However, this method of evaluating a metric is not robust enough to give accurate results.

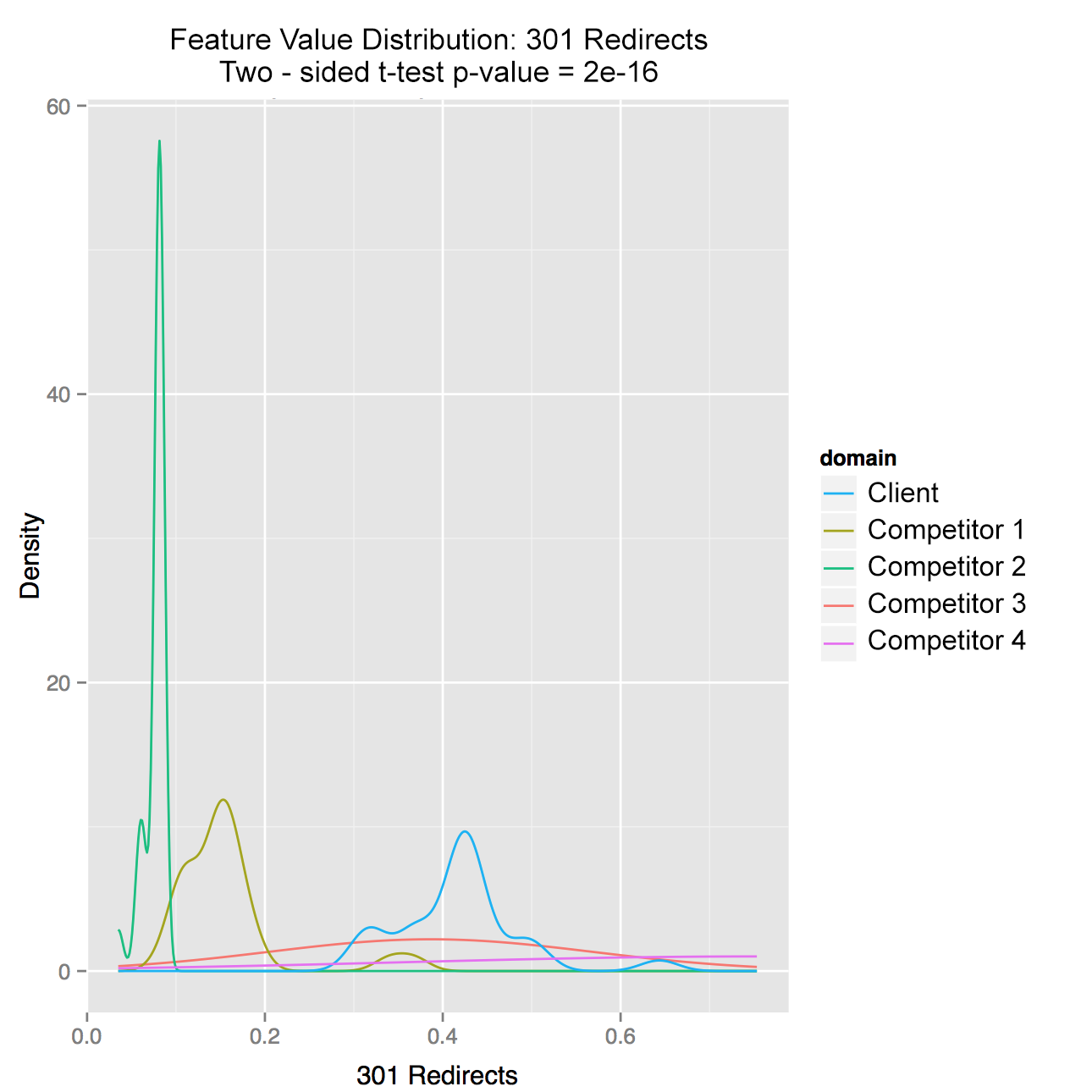

A more fruitful approach to determining the important factors for your rankings is to appraise how your site’s individual pages vary around your site average. This pattern of variation is known as the distribution. You then compare your distribution with your competitors’ and run tests to see whether there is a statistically significant difference.

In this graph, featuring the same client and competitors, 301 redirects are indeed shown to be a potential game changer: the probability that the client and the leading competitor share the same average is virtually zero.
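Which test to use is a judgement call. As one illustration (an assumption, not necessarily the test behind the graph), the sketch below runs a two-sided permutation test on the difference in mean 301 redirects per page between a client and a competitor. It repeatedly shuffles the pooled pages into two groups and counts how often a difference at least as large as the observed one arises by chance. The per-page counts are hypothetical.

```python
import random


def mean(values):
    return sum(values) / len(values)


def permutation_test(client, competitor, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns an approximate p-value: the share of random relabellings of the
    pooled pages whose difference in means is at least as large as observed.
    """
    rng = random.Random(seed)
    observed = abs(mean(client) - mean(competitor))
    pooled = list(client) + list(competitor)
    n_client = len(client)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_client]) - mean(pooled[n_client:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction: never report a p-value of exactly zero.
    return (extreme + 1) / (n_resamples + 1)


# Hypothetical 301 redirects per page, sampled from each site
client_redirects = [3, 4, 2, 5, 4, 3, 6, 4, 5, 3]
competitor_redirects = [0, 1, 0, 1, 0, 0, 1, 2, 0, 1]
p_value = permutation_test(client_redirects, competitor_redirects)
print(f"p = {p_value:.4f}")
```

A permutation test makes no assumption that the per-page values are normally distributed, which suits skewed SEO metrics. Tests such as Mann-Whitney U or Kolmogorov-Smirnov would instead compare the shape of the whole distribution rather than just its mean.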

Comparing yourself against your competitors for each and every potential website change, and then applying statistical tests, will help you identify the game changers that will genuinely improve your search rankings.

Prioritising SEO recommendations

Your SEO agency could come up with many potentially game-changing insights in their SEO audit, but how are these prioritised?

Here at Artios, we use maths to estimate the probability that each recommendation will increase your traffic. This means the changes can be ranked so that you can work on ‘quick wins’ first.

The chart below, for example, shows that reducing the ratio of permanently redirecting (301) pages to the total number of site pages is associated with an increase in our client’s ranking, with a score of 0.42 (anything over 0.14 is deemed statistically significant).

Quantifying the predictions is also important. The regression analysis in that chart shows how much of our client’s traffic increase can be explained: in this case, 42% of the change in traffic is explained by 301 redirects.

Verifying our SEO analysis

Correlation doesn’t equal causation, and this is where our artificial intelligence algorithms come in. Tracking how both the predictors and the outcome change over time (as shown above) means we can establish:

- The SEO recommendations that delivered performance

- The amount of increased traffic

- The accuracy of the predictions

- The length of time required for those changes to take effect

In order to ensure we derive the correct meaning from models and data, we also check the actual effect on traffic against the predicted levels of traffic.
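One simple way to compare actual traffic with predicted traffic is the mean absolute percentage error (MAPE), alongside the average signed error to show whether forecasts run high or low. A minimal sketch with hypothetical weekly figures; `forecast_accuracy` is an illustrative name, not a described tool.

```python
def forecast_accuracy(actual, predicted):
    """Compare actual traffic with predicted traffic.

    Returns (mape, bias): mean absolute percentage error, and mean signed
    error (positive means the model over-predicted on average).
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty series of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    n = len(actual)
    mape = 100 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / n
    bias = sum(p - a for a, p in zip(actual, predicted)) / n
    return mape, bias


# Hypothetical weekly organic sessions after a change went live
actual = [1200, 1260, 1310, 1405]
predicted = [1180, 1290, 1350, 1380]
mape, bias = forecast_accuracy(actual, predicted)
print(f"MAPE: {mape:.1f}%  bias: {bias:+.1f} sessions/week")
```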

Artificial intelligence algorithms are also notable for their ability to learn. For example, we use gradient descent, in which the algorithm takes a series of steps, each reducing the prediction error, until the error is minimised.
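A minimal sketch of gradient descent, fitting a straight line y = w·x + b by repeatedly stepping both parameters against the gradient of the mean squared error, and stopping once a step no longer reduces the error meaningfully. The learning rate, tolerance and data are illustrative assumptions; this is the textbook technique, not the agency’s production model.

```python
def gradient_descent(xs, ys, learning_rate=0.05, tolerance=1e-10, max_steps=100_000):
    """Fit y = w * x + b by batch gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    previous_loss = float("inf")
    loss = previous_loss
    for _ in range(max_steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / n
        if previous_loss - loss < tolerance:  # improvement too small (or loss rose): stop
            break
        previous_loss = loss
        # Partial derivatives of the MSE with respect to w and b
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, loss


# Hypothetical data lying exactly on y = 2x + 1
w, b, loss = gradient_descent([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(f"w={w:.3f}, b={b:.3f}, mse={loss:.2e}")
```

The learning rate controls the step size: too large and the error can grow instead of shrinking, too small and convergence takes many more steps.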

Given the data-rich nature of SEO, and the mathematical foundations of the technologies available, it’s increasingly clear that mathematical techniques should form the basis for any decisions about how websites are optimised for search engines.

Conclusion

SEO best practice is changing fast, so fast that it’s no longer enough to tweak your SEO tactics to make them a little better. Instead, you need a sophisticated, maths-centric approach to SEO that will deliver statistically significant and tangible results, whatever algorithms the search engines decide to employ. Make sure your company wins, rather than loses out, from changes to the digital landscape by taking a maths-driven approach to SEO.

Want to grow your business through maths-driven SEO? Get in touch! We’d love to hear from you.